Within 10 minutes of its birth, a fawn is able to stand. Within seven hours, it is able to walk. Between those two milestones, it engages in a highly adorable, highly frenetic flailing of limbs to figure it all out.

That’s the idea behind AI-powered robotics. While autonomous robots, like self-driving cars, are already a familiar concept, autonomously learning robots are still just an aspiration. Existing reinforcement-learning algorithms that allow robots to learn movements through trial and error still rely heavily on human intervention. Every time the robot falls down or walks out of its training environment, it needs someone to pick it up and set it back to the right position.

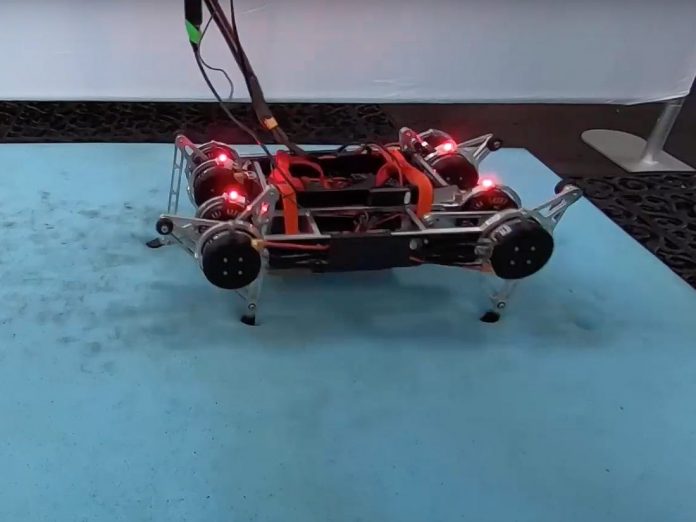

Now a new study from researchers at Google has made an important advancement toward robots that can learn to navigate without this help. Within a few hours, relying purely on tweaks to current state-of-the-art algorithms, they successfully got a four-legged robot to learn to walk forward and backward, and turn left and right, completely on its own.

The work builds on previous research conducted a year ago, when the group first figured out how to get the robot to learn in the real world. Reinforcement learning is commonly done in simulation: a virtual doppelgänger of the robot flails around a virtual doppelgänger of the environment until the algorithm is robust enough to operate safely. It is then imported into the physical robot.

This method is helpful for avoiding damage to a robot and its surroundings during its trial-and-error process, but it also requires an environment that is easy to model. The natural scattering of gravel or the spring of a mattress under a robot’s footfall takes so long to simulate that it’s not even worth it.

In this case the researchers decided to avoid modeling challenges altogether by training in the real world from the beginning. They devised a more efficient algorithm that could learn with fewer trials and thus fewer errors, and got the robot up and walking within two hours. Because the physical environment provided natural variation, the robot was also able to quickly adapt to other reasonably similar environments, like inclines, steps, and flat terrain with obstacles.

But a human still had to babysit the robot and manually intervene hundreds of times, says Jie Tan, a paper coauthor who leads the robotics locomotion team at Google Brain. “Initially I didn’t think about that,” he says.

So they started solving this new problem. First, they bounded the terrain that the robot was allowed to explore and had it train on multiple maneuvers at a time. If the robot reached the edge of the bounding box while learning how to walk forward, it would reverse direction and start learning how to walk backward instead.
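
As a rough illustration of that switching rule, here is a minimal sketch in Python. It is not the researchers' code: the one-dimensional `Box`, the `margin` parameter, and the names `next_task` and `simulate` are all hypothetical, and the real robot also practices turning, which this toy version leaves out.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical training area along one axis, in metres."""
    x_min: float
    x_max: float


def next_task(current_task: str, x: float, box: Box, margin: float = 0.1) -> str:
    """Choose which maneuver to practice next, given the robot's position x."""
    # Near the far edge while practicing forward walking: switch to backward.
    if current_task == "forward" and x >= box.x_max - margin:
        return "backward"
    # Near the near edge while practicing backward walking: switch to forward.
    if current_task == "backward" and x <= box.x_min + margin:
        return "forward"
    return current_task


def simulate(steps: int, step_size: float = 0.25) -> list[str]:
    """Toy rollout: the robot moves a fixed distance per step in a 1 m box."""
    box = Box(0.0, 1.0)
    x, task = 0.5, "forward"
    history = []
    for _ in range(steps):
        x += step_size if task == "forward" else -step_size
        task = next_task(task, x, box)
        history.append(task)
    return history
```

In this toy rollout the robot bounces between the two edges indefinitely, so every step yields training data for one maneuver or the other and nobody has to carry it back to the middle.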

Second, the researchers constrained the robot’s trial movements, making it cautious enough to minimize damage from repeated falling. For the times when the robot inevitably fell anyway, they added a separate hard-coded algorithm to help it stand back up.
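
The article does not say how the movements were constrained or how the stand-up routine works, so the following Python sketch is only one plausible reading. Clamping joint targets around a nominal pose, the tilt threshold, and the names `constrain_action`, `has_fallen`, and `control_step` are all assumptions made for illustration.

```python
def constrain_action(proposed, nominal, max_delta=0.2):
    """Clamp each joint target to within max_delta of a nominal pose (assumed rule)."""
    return [
        min(max(p, n - max_delta), n + max_delta)
        for p, n in zip(proposed, nominal)
    ]


def has_fallen(roll, pitch, limit=1.0):
    """Treat a large body tilt (radians) as a fall; the threshold is hypothetical."""
    return abs(roll) > limit or abs(pitch) > limit


def control_step(policy_action, nominal, roll, pitch):
    """Return (mode, joint_targets) for one control tick."""
    if has_fallen(roll, pitch):
        # Stand-in for a scripted recovery: here it just commands the nominal pose.
        return "recover", list(nominal)
    # Otherwise keep learning, but only within the cautious action range.
    return "learn", constrain_action(policy_action, nominal)
```

The point of the split is that the learned policy only ever acts inside the cautious range, while recovery stays scripted and never depends on what the policy has learned so far.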

Through these various tweaks, the robot learned how to walk autonomously across several different surfaces, including flat ground, a memory foam mattress, and a doormat with crevices. The work shows the potential for future applications that may require robots to navigate through rough and unknown terrain without the presence of a human.

“I think this work is quite exciting,” says Chelsea Finn, an assistant professor at Stanford who is also affiliated with Google but not involved with the research. “Removing the person from the process is really hard. By allowing robots to learn more autonomously, robots are closer to being able to learn in the real world that we live in, rather than in a lab.”

She cautions, however, that the setup currently relies on a motion capture system above the robot to determine its location. That won’t be possible in the real world.

Moving forward, the researchers hope to adapt their algorithm to different kinds of robots or to multiple robots learning at the same time in the same environment. Ultimately, Tan believes, cracking locomotion will be key to unlocking more useful robots.

“A lot of places are built for humans, and we all have legs,” he says. “If a robot cannot use legs, they cannot navigate the human world.”