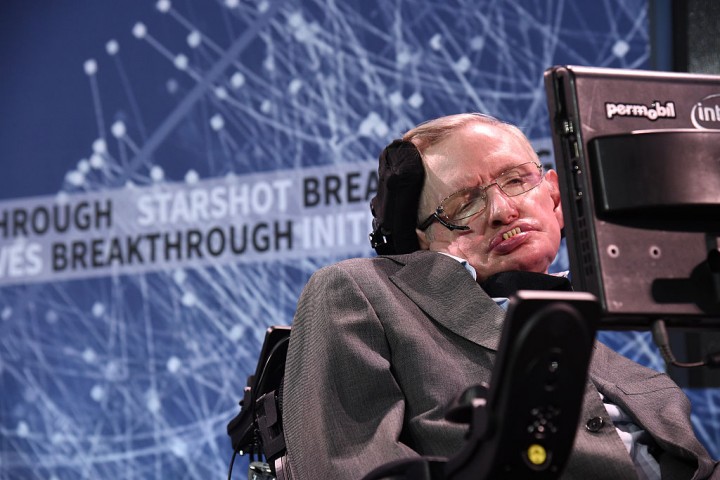

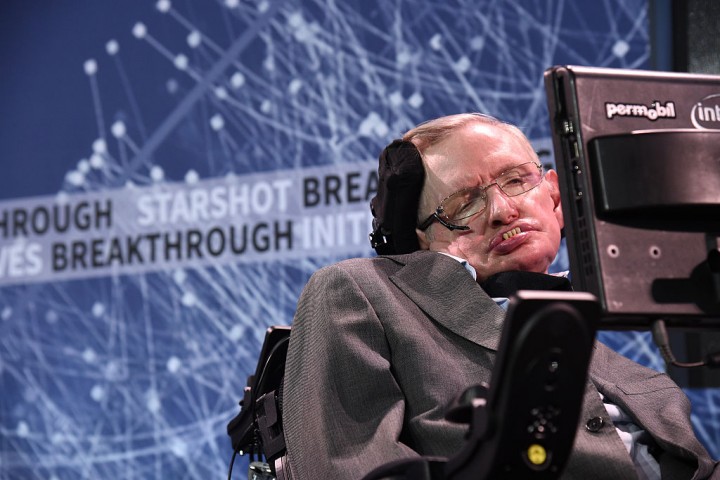

Stephen Hawking once said “artificial intelligence could spell the end of the human race.” This week, he announced his plans to play some role in making sure that doesn’t happen.

In recent years, Hawking has issued some rather apocalyptic warnings about artificial intelligence. “The development of full artificial intelligence could spell the end of the human race,” he told the BBC in 2014. So when the world’s most famous scientist praised the opening of a new A.I. research center earlier this week, the Earth wobbled slightly as a million heads turned at once.

On Wednesday, Hawking spoke at the official opening of the Leverhulme Centre for the Future of Intelligence (CFI) at Cambridge University. While Hawking tempered the rather dire tone of his previous conjecture on A.I., he didn’t mince his words, either.

“The rise of powerful A.I. will be either the best or the worst thing ever to happen to humanity,” Hawking said. “We do not yet know which.”

Hawking’s revised, cautiously neutral approach to A.I. is likely inspired by the mission of the new institution. Rather than work to develop the technology of artificial intelligence, CFI is dedicated to assessing the impact of A.I. on civilization. In fact, the research center hopes to anticipate both the benefits and dangers of machine intelligence, and to advocate for appropriate public policy.

CFI brings together four of the world’s leading research universities (Cambridge, Oxford, UC Berkeley and Imperial College London) and plans to establish an international, interdisciplinary community of researchers. The CFI will work with both government and industry to develop plans for specific issues, such as the regulation of autonomous weapons.

According to press materials issued by Cambridge, the institute will initially focus on seven distinct projects in the first three-year phase of its work. Among the initial research topics:

“Science, value and the future of intelligence”

“Policy and responsible innovation”

“Autonomous weapons – prospects for regulation”

“Trust and transparency”

It seems like much of the academic and scientific community is bracing for the imminent arrival of advanced A.I. We recently reported on an A.I. ethics conference in New York City that addressed topics ranging from autonomous currency exchanges to sex robots. The government is getting in on preparations, too. On October 12, the White House issued a 60-page report, “Preparing for the Future of Artificial Intelligence.”

The CFI is the most recent of several high-powered think tanks dedicated to assessing and prepping for A.I. Over in Cambridge, Mass., the Future of Life Institute has a broad mandate to safeguard life and develop optimistic visions, but much of its focus has been on A.I. specifically. At the University of Oxford, the Future of Humanity Institute has a similar mission statement.

Entrepreneur Elon Musk’s OpenAI organization is more overtly dedicated to developing A.I. technology, but clearly has big-picture concerns in mind regarding, you know, civilization itself. From the OpenAI website: “Our mission is to build safe A.I. and ensure A.I.’s benefits are as widely and evenly distributed as possible.”

Hawking’s endorsement of the new research center is important, if only from a public relations standpoint. As the planet’s resident rock star scientist, Hawking can effectively communicate to civilians in a way that other experts can’t. So if anyone has remaining doubts as to the proportional significance of A.I., Hawking’s assessment is blunt:

“The research done by this center will be crucial to the future of our civilization and of our species,” Hawking said.